Relion

Overview

Relion is a program that employs an empirical Bayesian approach to the refinement of (multiple) 3D reconstructions or 2D class averages in electron cryo-microscopy (cryo-EM). It is developed in the group of Sjors Scheres at the MRC Laboratory of Molecular Biology.

Version 5.0 is installed on CSF3. It has been compiled from source using the Intel v19.1.1 compiler and OpenMPI 4.1.1. Currently only the GPU version has been compiled. The Relion user-interface has been enabled.

Versions 3.0.6, 3.0.8, 3.1-beta, 3.1-beta2 and 3.1.0 are installed on CSF3. They have been compiled from source using the Intel v18.0.3 compilers and OpenMPI 3.1.4. Versions are available for CPU-only usage (with and without CPU Acceleration functions compiled) and GPU (Nvidia v100) usage. All versions have been compiled with the Relion user-interface enabled.

Older versions 2.1 and 3.0-beta are also installed. They have been compiled from source using the Intel v17.0.7 compilers and OpenMPI 3.1.1. Versions are available for CPU-only usage (non accelerated) and GPU (Nvidia v100) usage. All versions have been compiled with the Relion user-interface enabled.

Some of the CPU-only versions use the Relion-accelerated CPU code compiled with Intel Compiler multiple code-path optimizations for AVX, AVX2 and AVX512 architectures. This means the application can be run on any of the CSF3 compute nodes and some functions will use optimized versions for the CPU in that compute node.
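To see which of these instruction sets a particular node advertises, something like the following could be run inside a job. It is only a sketch: `best_avx` is a hypothetical helper name, and it reports what the CPU exposes in `/proc/cpuinfo`, not which code path Relion actually selects at run time.

```shell
#!/bin/bash
# Report the widest AVX level found in a space-separated list of CPU flags.
# best_avx is a hypothetical helper, not part of Relion or the CSF.
best_avx() {
  local flags=" $1 "
  case "$flags" in
    *" avx512f "*) echo "AVX512" ;;
    *" avx2 "*)    echo "AVX2" ;;
    *" avx "*)     echo "AVX" ;;
    *)             echo "none" ;;
  esac
}

# On a Linux node the flags can be read from the first "flags" line of /proc/cpuinfo.
if [ -r /proc/cpuinfo ]; then
  node_flags=$(grep -m1 '^flags' /proc/cpuinfo | cut -d: -f2)
  echo "Widest AVX level on this node: $(best_avx "$node_flags")"
fi
```

Run on a compute node (not the login node) the result describes the hardware the job is actually using.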

Restrictions on use

There are no restrictions on accessing this software on the CSF. The software is released under the GNU GPL v2 license. The authors request you cite the use of the software in your publications – please see the Relion website for details.

Set up procedure

To access the software you must first load one of the following modulefiles, according to whether you wish to run on CPU-only or GPU nodes:

5.0.0 version

This version has been compiled with the Intel 19.1.2 compiler.

# Released 5.0.0 version
module load apps/intel-19.1/relion/5.0.0-gpu-sp-all    # Single-precision GPU

The modulefile apps/intel-19.1/relion/VERSION-ARCH-all is a convenience modulefile that loads several other modulefiles you will usually need to use Relion successfully. It will load the following modulefiles:

apps/intel-19.1/relion/VERSION-ARCH    # Matches the version in the -all name
apps/intel-19.1/ctffind/4.1.14         # ctffind3 is also available on the CSF3
apps/intel-19.1/unblur/1.0.2
apps/intel-19.1/summovie/1.0.2
apps/binapps/gctf/1.06

3.1.0 versions

This version has been compiled with the Intel 18.0.3 compiler.

# NOTE: Currently the CSF3 will only do single-node multi-core CPU and GPU jobs. You cannot
# run larger multi-node jobs.
# Released 3.1.0 version
module load apps/intel-18.0/relion/3.1.0-gpu-sp-all       # Single-precision GPU
module load apps/intel-18.0/relion/3.1.0-cpuacc-sp-all    # Single-precision CPU-Accelerated
module load apps/intel-18.0/relion/3.1.0-cpu-sp-all       # Single-precision CPU

The modulefile apps/intel-18.0/relion/VERSION-ARCH-all is a convenience modulefile that loads several other modulefiles you will usually need to use Relion successfully. It will load the following modulefiles:

apps/intel-18.0/relion/VERSION-ARCH    # Matches the version in the -all name
apps/intel-18.0/ctffind/4.1.13         # ctffind3 is also available on the CSF3
apps/intel-18.0/unblur/1.0.2
apps/intel-18.0/summovie/1.0.2
apps/binapps/gctf/1.06

If you wish to use a different version of the external tools than that provided by the -all modulefile then load the modulefile without the -all in the name and then load the individual modulefiles needed for each external tool.
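As a rough sketch of that approach, the helper below lists the modulefiles that the 3.1.0 -all modulefile is documented above to load, so that each can be loaded individually and any one line swapped for another version. The function names `relion_modulefiles` and `load_relion_modules` are hypothetical; the modulefile names are the ones listed on this page.

```shell
#!/bin/bash
# Print the individual modulefiles behind apps/intel-18.0/relion/VERSION-ARCH-all.
# relion_modulefiles is a hypothetical helper; edit a line to pick another tool version.
relion_modulefiles() {
  local version_arch="$1"   # e.g. 3.1.0-gpu-sp (note: no -all suffix)
  printf '%s\n' \
    "apps/intel-18.0/relion/${version_arch}" \
    "apps/intel-18.0/ctffind/4.1.13" \
    "apps/intel-18.0/unblur/1.0.2" \
    "apps/intel-18.0/summovie/1.0.2" \
    "apps/binapps/gctf/1.06"
}

# Load each modulefile in turn (requires the module command, so CSF only).
load_relion_modules() {
  local m
  for m in $(relion_modulefiles "$1"); do
    module load "$m"
  done
}

# Example on the CSF:
#   load_relion_modules 3.1.0-gpu-sp
```

Running `module list` afterwards shows what was actually loaded.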

3.1 beta versions

This version has been compiled with the Intel 18.0.3 compiler.

# NOTE: Currently the CSF3 will only do single-node multi-core CPU and GPU jobs. You cannot
# run larger multi-node jobs.
# A new build of the 3.1-beta source (includes relion_convert_to_tiff[_mpi]). Commit 06afb9f.
module load apps/intel-18.0/relion/3.1-beta2-gpu-sp-all       # Single-precision GPU
module load apps/intel-18.0/relion/3.1-beta2-cpuacc-sp-all    # Single-precision CPU-Accelerated
module load apps/intel-18.0/relion/3.1-beta2-cpu-sp-all       # Single-precision CPU
# Older build of the 3.1-beta source. Commit 7f8d1d6.
module load apps/intel-18.0/relion/3.1-beta-gpu-sp-all        # Single-precision GPU
module load apps/intel-18.0/relion/3.1-beta-cpuacc-sp-all     # Single-precision CPU-Accelerated
module load apps/intel-18.0/relion/3.1-beta-cpu-sp-all        # Single-precision CPU

The modulefile apps/intel-18.0/relion/VERSION-ARCH-all is a convenience modulefile that loads several other modulefiles you will usually need to use Relion successfully. It will load the following modulefiles:

apps/intel-18.0/relion/VERSION-ARCH    # Matches the version in the -all name
apps/intel-18.0/ctffind/4.1.13         # ctffind3 is also available on the CSF3
apps/intel-18.0/unblur/1.0.2
apps/intel-18.0/summovie/1.0.2
apps/binapps/gctf/1.06

If you wish to use a different version of the external tools than that provided by the -all modulefile then load the modulefile without the -all in the name and then load the individual modulefiles needed for each external tool.

3.0.8 version

This version has been compiled with the Intel 18.0.3 compiler.

# NOTE: Currently the CSF3 will only do single-node multi-core CPU and GPU jobs. You cannot
# run larger multi-node jobs.
module load apps/intel-18.0/relion/3.0.8-gpu-sp-all       # Single-precision GPU
module load apps/intel-18.0/relion/3.0.8-cpuacc-sp-all    # Single-precision CPU-Accelerated
module load apps/intel-18.0/relion/3.0.8-cpu-sp-all       # Single-precision CPU

The modulefile apps/intel-18.0/relion/3.0.8-ARCH-all loads:

apps/intel-18.0/relion/3.0.8-ARCH    # Matches the version in the -all name
apps/intel-18.0/ctffind/4.1.13       # ctffind3 is also available on the CSF3
apps/intel-18.0/unblur/1.0.2
apps/intel-18.0/summovie/1.0.2
apps/binapps/gctf/1.06

If you wish to use a different version of the external tools than that provided by the -all modulefile then load the modulefile without the -all in the name and then load the individual modulefiles needed for each external tool.

3.0.6 version

This version has been compiled with the Intel 18.0.3 compiler.

# NOTE: Currently the CSF3 will only do single-node multi-core CPU and GPU jobs. You cannot
# run larger multi-node jobs.
module load apps/intel-18.0/relion/3.0.6-gpu-sp-all    # Single-precision GPU code

The modulefile apps/intel-18.0/relion/3.0.6-gpu-sp-all loads:

apps/intel-18.0/relion/3.0.6-gpu-sp    # Matches the version in the -all name
apps/intel-18.0/ctffind/4.1.13         # ctffind3 is also available on the CSF3
apps/intel-18.0/unblur/1.0.2
apps/intel-18.0/summovie/1.0.2
apps/binapps/gctf/1.06

If you wish to use a different version of the external tools than that provided by the -all modulefile then load the modulefile without the -all in the name and then load the individual modulefiles needed for each external tool.

3.0-beta version

This version has been compiled with the Intel 17.0.7 compiler.

# NOTE: Currently the CSF3 will only do single-node multi-core CPU and GPU jobs. You cannot
# run larger multi-node jobs.
# Version 3.0-beta CPU-only + default external tools (see below) - RECOMMENDED
module load apps/intel-17.0/relion/3.0-beta-cpu-dp-all    # Double-precision CPU-only code
module load apps/intel-17.0/relion/3.0-beta-cpu-sp-all    # Single-precision CPU-only code
# ... as above without default external tools
module load apps/intel-17.0/relion/3.0-beta-cpu-dp
module load apps/intel-17.0/relion/3.0-beta-cpu-sp
# Version 3.0-beta GPU (nvidia v100) + default external tools (see below) - RECOMMENDED
module load apps/intel-17.0/relion/3.0-beta-gpu-dp-all    # Double-precision GPU code
module load apps/intel-17.0/relion/3.0-beta-gpu-sp-all    # Single-precision GPU code
# ... as above without default external tools
module load apps/intel-17.0/relion/3.0-beta-gpu-dp
module load apps/intel-17.0/relion/3.0-beta-gpu-sp
# Version 2.1 CPU and GPU
module load apps/intel-17.0/relion/2.1-cpu       # CPU-only
module load apps/intel-17.0/relion/2.1-gpu-dp    # Double-precision GPU code
module load apps/intel-17.0/relion/2.1-gpu-sp    # Single-precision GPU code

The modulefiles with -all in their name are helper modulefiles which simply load the main relion modulefile (for the corresponding version) and the modulefiles for the external tools used by relion. To see what is loaded run:

module show apps/intel-17.0/relion/3.0-beta-cpu-dp-all

The modulefile apps/intel-17.0/relion/3.0-beta-cpu-dp-all loads:

apps/intel-17.0/relion/3.0-beta-cpu-dp    # Matches the version in the -all name
apps/intel-17.0/ctffind/4.1.10            # ctffind3 is also available on the CSF3
apps/intel-17.0/unblur/1.0.2
apps/intel-17.0/summovie/1.0.2
apps/binapps/gctf/1.06

If you wish to use a different version of the external tools than that provided by the -all modulefile then load the modulefile without the -all in the name and then load the individual modulefiles needed for each external tool.

All modulefiles will also automatically load the required compiler and MPI modulefiles for you.

Running the application

Relion can be run with a GUI on the login node. This is mainly used to select input files and set up job parameters. The GUI will then submit your job to the batch queues.

Users must always run the main processing in batch (where the GUI permits submission to the batch system), never directly on the login node. This requires correct settings in the GUI, as instructed below.

Relion tools other than the GUI found running on the login node will be terminated without warning.

Please note, a tutorial on using Relion to process your data is beyond the scope of this page, which only describes how to run the application on the CSF. Please follow the Relion Tutorial (pdf) on the Relion website if you are new to the software.

Running the GUI

Once the modulefile is loaded you should navigate to your Relion project directory (this is important – it will usually contain a Micrographs subdirectory) and then run relion on the login node. For example:

cd ~/scratch/my_relion_project/
relion

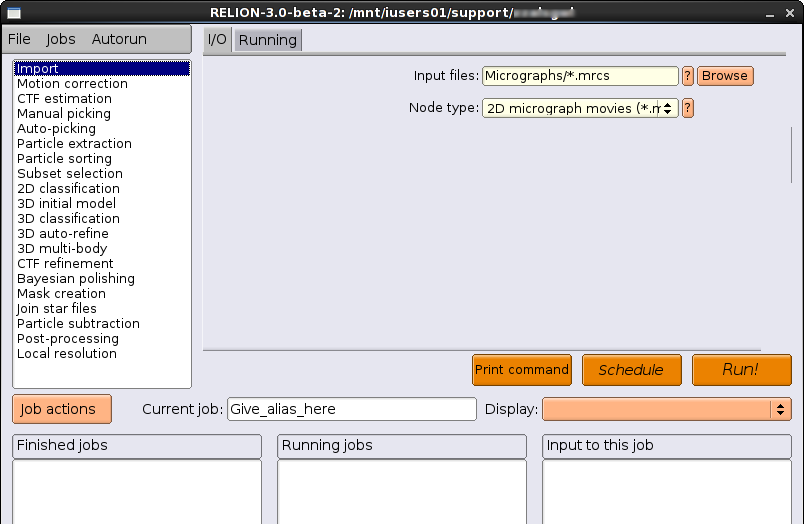

It will display the following GUI (click on image to enlarge):

Various operations can be performed by selecting from the list on the left hand side (Micrograph inspection, CTF estimation and so on). These operations allow you to specify use of the batch system, as detailed below.

Note: To re-activate a greyed-out Run button, press Alt+R in the GUI.

Using the GUI to run in Batch

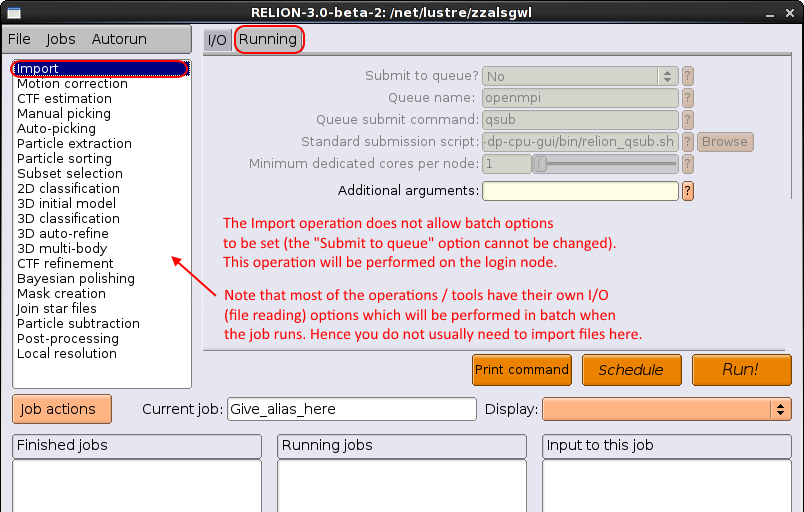

Each Relion processing operation (Micrograph inspection, CTF estimation etc) has a Running tab in its GUI page. There are three possible types of Running tab:

- Greyed-out (e.g., select Import from the list). This operation does not require the batch system:

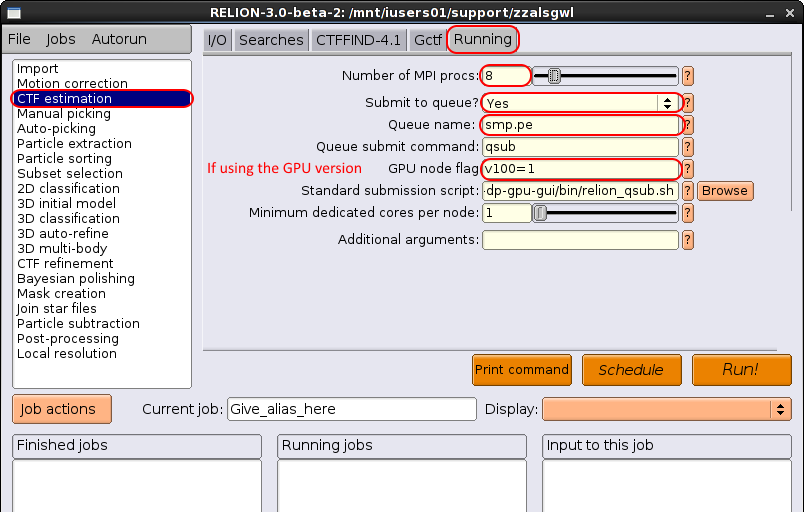

- Active for MPI job (e.g., select CTF estimation from the list). This operation will generate a batch script and submit it.

  The options should be set as follows:

  - Number of MPI procs: 1 for serial jobs, 2-32 for single-node jobs using Intel compute nodes (see Queue name below), 2-168 for single-node jobs using AMD compute nodes (see Queue name below). We recommend using single-node, 2-32 cores initially. If using a GPU node you must use smp.pe and between 2-8 CPU cores per v100 GPU or 2-12 CPU cores per A100 GPU. Hence if, for example, you request 2 v100 GPUs you can request up to 16 CPU cores in smp.pe.
  - Submit to queue?: You must set this to Yes. If you fail to do this your job will run on the login node and will be killed by the sysadmins. Users found repeatedly forgetting to turn on the Submit to queue option will be banned from using this software.
  - Queue name: Use smp.pe for single node jobs (2-32 cores) on Intel compute nodes or amd.pe for single node jobs (2-168 cores) on AMD compute nodes.
  - If using the GPU version: GPU node flag: Use v100=N or a100=N where N is the number of required GPUs. This should be between 1 and 4 depending on your granted access to the GPU nodes (permission to use the GPUs must be requested – without the appropriate settings applied to your CSF account by the sysadmins, GPU jobs will not run.) For the Queue name, you must use smp.pe and between 2-8 CPU cores per v100 GPU or 2-12 CPU cores per A100 GPU.
  - Queue submit command: leave as qsub
  - Standard submission script: leave as /opt/apps/apps/intel-XY.Z/relion/version/bin/relion_qsub.sh
  - Additional arguments: leave blank

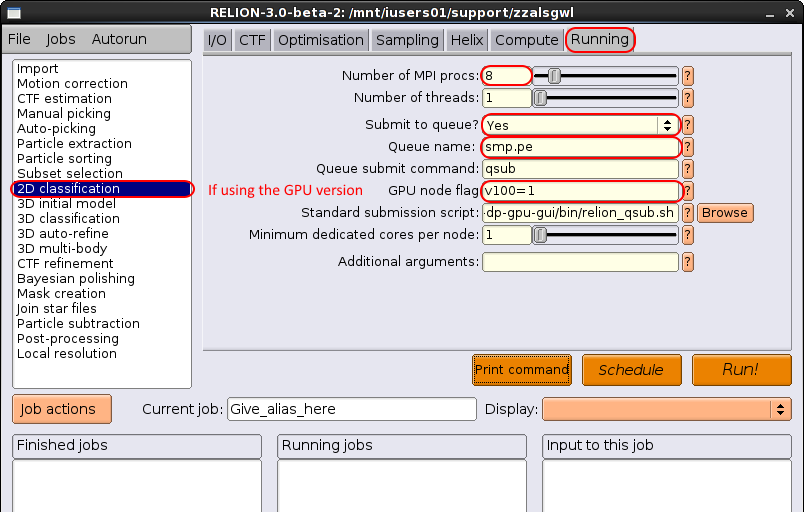

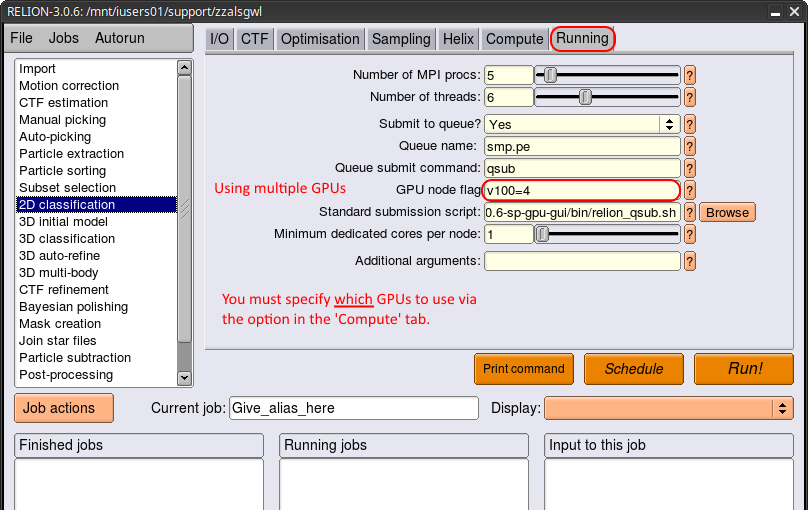

- Active for MPI+OpenMP job (e.g., select 2D classification from the list). This operation will generate a batch script and submit it.

  The options should be set as follows:

  - Number of MPI procs: 1 for serial jobs, 2-32 for single-node jobs using Intel compute nodes (see Queue name below), 2-168 for single-node jobs using AMD compute nodes (see Queue name below). We recommend using single-node, 2-32 cores initially. If using a GPU node you must use smp.pe and between 2-8 CPU cores per v100 GPU or 2-12 CPU cores per A100 GPU. Hence if, for example, you request 2 v100 GPUs you can request up to 16 CPU cores in smp.pe.
  - Number of threads: you can leave as 1 or specify the number of threads each MPI process will use. Note that in Relion v3.0.6, 3.0.8 and 3.1-beta (and later) the number of cores requested by the batch script will be Num MPI Procs X num threads. For example if you request 2 MPI processes with each one running 4 threads, the jobscript will correctly request 8 cores for the batch job. In versions installed before 3.0.6, you must load an extra modulefile named apps/intel-17.0/relion/corestest for the correct number of cores to be calculated if you request more than one thread.
  - Submit to queue?: You must set this to Yes. If you fail to do this your job will run on the login node and will be killed by the sysadmins. Users found repeatedly forgetting to turn on the Submit to queue option will be banned from using this software.
  - Queue name: Use smp.pe for single node jobs (2-32 cores) on Intel compute nodes or amd.pe for single node jobs (2-168 cores) on AMD compute nodes.
  - If using the GPU version: GPU node flag: Use v100=N or a100=N where N is the number of required GPUs. This should be between 1 and 4 depending on your granted access to the GPU nodes (permission to use the GPUs must be requested – without the appropriate settings applied to your CSF account by the sysadmins, GPU jobs will not run.) For the Queue name, you must use smp.pe and between 2-8 CPU cores per v100 GPU or 2-12 CPU cores per A100 GPU.
  - Queue submit command: leave as qsub
  - Standard submission script: leave as /opt/apps/apps/intel-XY.Z/relion/version/bin/relion_qsub.sh
  - Additional arguments: leave blank

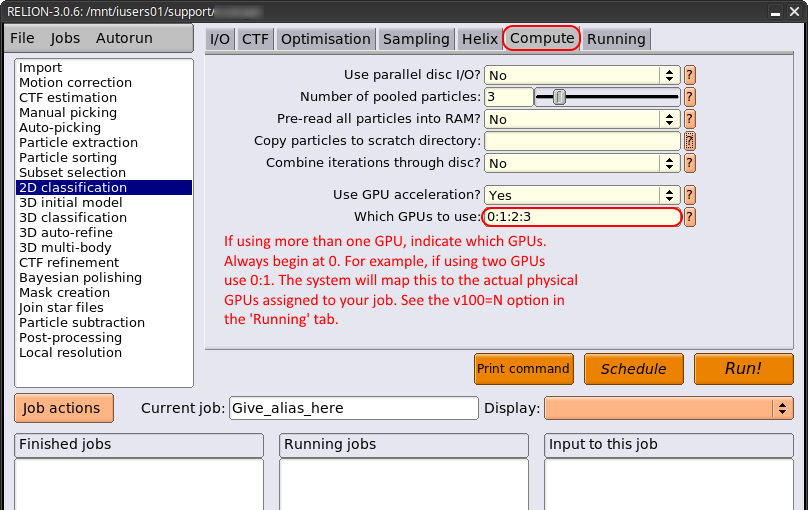

- If using multiple GPUs you must also specify how many GPUs to use and also which GPUs to use. This is done via the Running tab and the Compute tab. For example, suppose you wish to use all 4 GPUs on a node, first you need to request this many GPUs:

You must then indicate the GPU ids to use on the Compute tab.

The GPU IDs should always begin with 0 and further GPUs can be added by separating the IDs with colons. For example, if using two GPUs you would specify 0:1. These IDs will be mapped to the physical GPUs assigned to your job.
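The ID string follows a simple pattern, which the short sketch below generates for a given number of GPUs. The helper name `gpu_ids` is hypothetical and is not a Relion command; it only illustrates the format to type into the Compute tab.

```shell
#!/bin/bash
# Build the colon-separated GPU ID string for N GPUs, always starting at 0.
# gpu_ids is a hypothetical helper, not a Relion command.
gpu_ids() {
  local n="$1" ids="0" i
  for ((i = 1; i < n; i++)); do
    ids="${ids}:${i}"
  done
  echo "$ids"
}

gpu_ids 2   # prints 0:1
gpu_ids 4   # prints 0:1:2:3
```

The number passed in should match the N used in the v100=N or a100=N GPU node flag on the Running tab.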

Submitting the Batch Job from the GUI

Once you have set the Running tab options as indicated above, press the Run! button to submit an auto-generated jobscript to the batch system. It may appear that nothing has happened when you do this. However, you should check on the job using the usual qstat command by running it in a command-line window on the CSF. It will report whether your job is queued or running. If you see no output then your job has finished (either successfully or because an error occurred).

The auto-generated jobscript will be written to your current directory (your Relion project directory) and will be named similar to the operation being performed with a .script extension. For example:

run_ctffind_submit.script

The usual output and error output from the job will be written to files with .out and .err extensions respectively. You should check these for further information about your job. For example:

run_ctffind.out
run_ctffind.err
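A quick way to inspect these files from the command line is sketched below. `check_job_logs` is a hypothetical helper and the base name run_ctffind is taken from the example above. Note that a non-empty .err file does not by itself mean the job failed, since programs may also write warnings or progress messages there.

```shell
#!/bin/bash
# Summarise the .out/.err files written by a batch job with the given base name.
# check_job_logs is a hypothetical helper, not part of Relion or the CSF.
check_job_logs() {
  local base="$1"
  if [ ! -e "${base}.out" ] && [ ! -e "${base}.err" ]; then
    echo "no log files yet for ${base}"
    return 1
  fi
  if [ -s "${base}.err" ]; then
    echo "${base}.err is not empty; last lines:"
    tail -n 5 "${base}.err"
  else
    echo "${base}.err is empty"
  fi
}

# Example, run from the Relion project directory:
#   check_job_logs run_ctffind
```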

Summary

- Where the Running panel is active, ensure you set the Submit to Queue option to be Yes.

- Select the number of MPI procs to use.

- If available, the number of threads specifies how many threads each MPI process will use. The batch job will request num MPI proc X num threads cores in the batch system.

- Set the Queue Name to be smp.pe or amd.pe.

- Pressing the Run! button will submit an auto-generated jobscript to the batch system.

- Check on your job by running qstat in a terminal window on the CSF.
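The core-count arithmetic in this summary can be checked before pressing Run! with a small script such as the one below. It is a sketch only: `check_relion_cores` is a hypothetical helper, and the limits it encodes (32 cores in smp.pe, 168 in amd.pe, 8 CPU cores per v100 GPU, 12 per A100 GPU) are simply the figures quoted on this page.

```shell
#!/bin/bash
# Sanity-check Running tab settings against the limits quoted on this page.
# check_relion_cores is a hypothetical helper, not part of Relion or the CSF.
# Usage: check_relion_cores QUEUE MPI_PROCS THREADS [GPU_TYPE GPU_COUNT]
check_relion_cores() {
  local queue="$1" mpi="$2" threads="$3" gpu_type="${4:-}" gpu_count="${5:-0}"
  local cores=$((mpi * threads)) max
  case "$queue" in
    smp.pe) max=32 ;;
    amd.pe) max=168 ;;
    *) echo "unknown queue: $queue"; return 1 ;;
  esac
  if [ -n "$gpu_type" ]; then
    # GPU jobs must use smp.pe, with a per-GPU cap on CPU cores.
    [ "$queue" = "smp.pe" ] || { echo "GPU jobs must use smp.pe"; return 1; }
    case "$gpu_type" in
      v100) max=$((8 * gpu_count)) ;;
      a100) max=$((12 * gpu_count)) ;;
      *) echo "unknown GPU type: $gpu_type"; return 1 ;;
    esac
  fi
  if [ "$cores" -gt "$max" ]; then
    echo "too many cores: $cores requested, limit is $max"
    return 1
  fi
  echo "ok: $cores cores in $queue"
}

check_relion_cores smp.pe 2 4            # 2 MPI procs x 4 threads = 8 cores
check_relion_cores smp.pe 16 1 v100 2    # 16 cores is the cap for 2 v100 GPUs
```

The two example calls print "ok: 8 cores in smp.pe" and "ok: 16 cores in smp.pe" respectively.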

Advanced: Custom Batch Script Template

This section is for advanced users familiar with Relion and the CSF batch system. In most cases the default settings will work for you and you should not need to follow this section.

Relion auto-generates jobscripts by filling in place-holders in a qsub template file named relion_qsub.sh. It is possible for you to take a copy of this file, modify it and have Relion use your new template each time you submit a job via the Relion GUI. The following steps are required:

- Exit Relion if the GUI is currently running

- Copy the system-wide template file (for example into a directory in your home area), naming the copy qsub.sh so that it matches the file name used in the following steps:

cp $RELION_QSUB_TEMPLATE ~/my_relion_project/qsub.sh

- Edit the template (see below for more information):

gedit ~/my_relion_project/qsub.sh

- Update the environment setting indicating where the template file is:

export RELION_QSUB_TEMPLATE=~/my_relion_project/qsub.sh

- Restart Relion:

relion
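The copy and environment steps can be wrapped in a small function, sketched below. `copy_qsub_template` is a hypothetical helper name; it assumes, as the steps above imply, that the Relion modulefile has already set RELION_QSUB_TEMPLATE to the system-wide template.

```shell
#!/bin/bash
# Copy a qsub template into a directory under the name qsub.sh and print the new path.
# copy_qsub_template is a hypothetical helper, not part of Relion or the CSF.
copy_qsub_template() {
  local src="$1" dest_dir="$2"
  [ -f "$src" ] || { echo "template not found: $src" >&2; return 1; }
  mkdir -p "$dest_dir" || return 1
  cp "$src" "$dest_dir/qsub.sh" || return 1
  echo "$dest_dir/qsub.sh"
}

# Typical use after loading a Relion modulefile:
#   new_template=$(copy_qsub_template "$RELION_QSUB_TEMPLATE" ~/my_relion_project) \
#     && export RELION_QSUB_TEMPLATE="$new_template"
```

The export only lasts for the current login session, so it needs repeating (or adding to a shell startup file) each time before starting the GUI.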

The default template file contains:

#!/bin/bash
### Requests XXXcoresXXX in batch which is number of mpiprocs X threads
#$ -pe XXXqueueXXX XXXcoresXXX
#$ -e XXXerrfileXXX
#$ -o XXXoutfileXXX
#$ -cwd
#$ -V
#$ -l "XXXextra1XXX"    # If using the GPU version will request v100 GPUs
                        # If using the CPU version, can be blank ("") or
                        # can request an CPU architecture (e.g., skylake)
mpiexec -n XXXmpinodesXXX XXXcommandXXX

The XXX.....XXX keywords are the place-holders that will be replaced with the settings you specify in the Relion GUI. You should leave the -pe setting as is. However, you may wish to add other CSF options such as

#$ -m ea                           # Email me when job ends or aborts
#$ -M my.email@manchester.ac.uk    # You must supply an email address

Alternatively you may want to add other commands to the jobscript, for example:

# Report the date before running
date
mpiexec -n XXXmpinodesXXX XXXcommandXXX
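To preview how an edited template might look once the place-holders are filled in, the substitution can be imitated with sed. This is an illustration only, not Relion's own implementation: `fill_template` is a hypothetical helper, only four of the place-holders are handled, and the values passed in are made up for the example.

```shell
#!/bin/bash
# Fill a few XXX...XXX place-holders in a template read from standard input.
# fill_template is a hypothetical helper; Relion performs its own substitution.
# Values containing |, & or backslashes would need escaping before use with sed.
fill_template() {
  local queue="$1" cores="$2" mpinodes="$3" command="$4"
  sed -e "s|XXXqueueXXX|${queue}|g" \
      -e "s|XXXcoresXXX|${cores}|g" \
      -e "s|XXXmpinodesXXX|${mpinodes}|g" \
      -e "s|XXXcommandXXX|${command}|g"
}

# Two lines from the default template, filled with example values.
printf '%s\n' \
  '#$ -pe XXXqueueXXX XXXcoresXXX' \
  'mpiexec -n XXXmpinodesXXX XXXcommandXXX' |
  fill_template smp.pe 8 2 'relion_refine_mpi --j 4'
```

With these example values the output is `#$ -pe smp.pe 8` followed by `mpiexec -n 2 relion_refine_mpi --j 4`, which shows the 2 MPI processes x 4 threads = 8 cores relationship described earlier.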

Advanced: Hand-crafting Jobscripts

If you wish to submit a batch job manually from the command-line (using your own batch script) in the traditional CSF manner, then you can ask Relion to display the command it will use in its auto-generated jobscript.

Start the Relion GUI, select the operation to perform (e.g., 2D Classification), select the Running tab, make any changes to the settings then press the Print command button to display in your shell window what Relion uses in its jobscript.

For example, the following is displayed for the 2D Classification example above:

`which relion_refine_mpi` --o Class2D/run1 --i particles.star \
  --dont_combine_weights_via_disc --preread_images --pool 1000 --pad 2 --ctf \
  --iter 25 --tau2_fudge 2 --particle_diameter 275 --fast_subsets --K 200 \
  --flatten_solvent --zero_mask --strict_highres_exp 25 --oversampling 1 \
  --psi_step 12 --offset_range 5 --offset_step 4 --norm --scale --j 1 --gpu
# --j 1  : Number of threads
# --gpu  : Using the GPU version

Hence you can write your own jobscript to run this command. Ensure that you add the usual mpirun command and specify the correct number of cores. For example:

#!/bin/bash
#$ -cwd             # Run from current directory
#$ -V               # Inherit modulefile settings
#$ -pe smp.pe 8     # We'll use 8 cores (single-node). Can be 2-32. Remove line for serial job.
                    # If using a GPU (see next flag) can be up to max 8 per v100 GPU.
                    # For example, you can specify up to 16 cores if using 2 GPUs.
# Add this line if using the GPU version
#$ -l v100=1        # Number of GPUs (1-4) depending on access granted
                    # Or use a100=1 for the A100 GPUs
# If using the GPU node fast temporary storage, copy dataset to it. EG:
cp -r ~/scratch/my_em_data_dir $TMPDIR
# We must add the usual mpirun command as used in many CSF jobscripts
mpirun -n $NSLOTS `which relion_refine_mpi` --o Class2D/run1 --i particles.star \
  --dont_combine_weights_via_disc --preread_images --pool 1000 --pad 2 --ctf \
  --iter 25 --tau2_fudge 2 --particle_diameter 275 --fast_subsets --K 200 \
  --flatten_solvent --zero_mask --strict_highres_exp 25 --oversampling 1 \
  --psi_step 12 --offset_range 5 --offset_step 4 --norm --scale --j 1 --gpu
# --j 1  : Number of threads
# --gpu  : Using the GPU version
# Note: Files copied to $TMPDIR (fast node-local storage) will be deleted automatically
# at the end of your job.

Notice that we have added mpirun -n $NSLOTS to the start of the command that Relion reported. Relion will automatically add this when it auto-generates a jobscript.

You should then submit your jobscript using the usual qsub command:

qsub myjobscript

where myjobscript is the name of your jobscript.

Further info

Updates

None.