The CSF2 has been replaced by the CSF3 - please use that system! This documentation may be out of date. Please read the CSF3 documentation instead.

Running ParaView Entirely on the CSF (remote X)

Overview

This page describes how to run ParaView entirely on the CSF over a remote X connection. This is not the preferred method of running ParaView. However, if you are unable to install the ParaView GUI locally on your own computer then this method will work.

You will need to have an X-server installed on your local computer. See further information about how to start X-Windows and GUI applications on the CSF.

The two methods of running ParaView described here are:

- Running only the ParaView GUI interactively – for small datasets only

- Running the ParaView GUI interactively and multiple pvserver processes to process and render your datasets in parallel – for large datasets

NOTE: In both cases the paraview GUI must not be run on the login node. You must run it interactively using qrsh as described below.

Running paraview GUI Interactively (small datasets)

After loading the ParaView modulefile, submit an interactive job to the short resource. Note that interactive jobs will not run in the standard 7-day resource.

module load apps/gcc/paraview/3.14.1
qrsh -V -cwd -b y -l inter -l short paraview

The ParaView GUI will start once the system has scheduled your interactive session.

Full details of interactive job limits are given on the qrsh page.
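If the GUI never appears, a common cause is that no X display was forwarded to the CSF. The sketch below is a hypothetical wrapper (the names have_display and run_paraview_gui are illustrative, not part of the CSF setup) that checks DISPLAY before running the same qrsh command. It assumes you logged in with X forwarding, which sets DISPLAY, and relies on qrsh -V passing that variable on to the compute node.

```shell
#!/bin/bash
# Hypothetical wrapper: only launch the ParaView GUI when an X display exists.
# Assumes you logged in with X forwarding (e.g. ssh -X), which sets DISPLAY;
# qrsh -V exports your environment, including DISPLAY, to the compute node.

# Succeeds (returns 0) if DISPLAY is set and non-empty.
have_display() {
    [ -n "${DISPLAY:-}" ]
}

# Runs the interactive GUI job described above; extra arguments go to paraview.
run_paraview_gui() {
    if ! have_display; then
        echo "DISPLAY is not set: start your X-server and log in with X forwarding." >&2
        return 1
    fi
    qrsh -V -cwd -b y -l inter -l short paraview "$@"
}
```

After loading the modulefile and sourcing a file containing these functions, running run_paraview_gui behaves like the qrsh command above, but fails early with a message if no display is available.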

Running MPI pvserver batch processes and paraview GUI Interactively (large datasets)

The paraview GUI should not be running at this point.

After loading the ParaView modulefile, use a jobscript to launch the pvserver MPI processes. The choice of Parallel Environment (PE) depends on whether you want to use InfiniBand-connected nodes for larger multi-node jobs or a single compute node for smaller jobs. InfiniBand-connected nodes require that the number of cores used is a multiple of 12 or 24 (and a minimum number of cores must be used). Single-node (SMP) jobs can use a smaller number of cores (up to 24).
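To catch an invalid core count before submitting, the rules on this page can be encoded in a small check. This is a sketch only: check_cores is a hypothetical helper, it covers just the two PEs named on this page (smp.pe and orte-24-ib.pe; no 12-core InfiniBand PE name is given here), and the InfiniBand minimum core count is not stated on this page, so it is not checked.

```shell
#!/bin/bash
# Sketch: check a core count against the rules stated on this page before
# editing the "#$ -pe" line of a jobscript. Assumed rules:
#   smp.pe        : 1-24 cores, and at most 12 if "-l short" is also requested
#   orte-24-ib.pe : a multiple of 24 (the minimum is not stated, so not checked)

# Usage: check_cores PE NCORES [short]
check_cores() {
    local pe=$1 n=$2 short=${3:-}
    # Accept only positive integers without leading zeros.
    case $n in
        ''|0*|*[!0-9]*) echo "not a positive integer: $n" >&2; return 1 ;;
    esac
    case $pe in
        smp.pe)
            if [ "$n" -gt 24 ]; then
                echo "smp.pe allows at most 24 cores" >&2; return 1
            fi
            if [ "$short" = short ] && [ "$n" -gt 12 ]; then
                echo "smp.pe with -l short allows at most 12 cores" >&2; return 1
            fi ;;
        orte-24-ib.pe)
            if [ $((n % 24)) -ne 0 ]; then
                echo "orte-24-ib.pe needs a multiple of 24 cores" >&2; return 1
            fi ;;
        *)
            echo "unknown parallel environment: $pe" >&2; return 1 ;;
    esac
    return 0
}
```

For example, check_cores orte-24-ib.pe 48 succeeds, while check_cores orte-24-ib.pe 36 and check_cores smp.pe 16 short print an error and return 1.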

pvserver in SMP (single-node smaller jobs)

Set up ParaView by loading the following modulefile on the login node:

module load apps/gcc/paraview/3.14.1

(Note that the 4.0.1 version cannot be used – the paraview GUI executable is not installed on the CSF in this version. It only has the off-screen pvserver and pvbatch executables.)
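Because one installed version lacks the GUI executable, it can help to confirm what the loaded modulefile actually provides before going further. A minimal sketch, using a hypothetical helper named check_paraview_tools, that looks for the paraview and pvserver executables on your PATH:

```shell
#!/bin/bash
# Hypothetical helper: confirm the loaded ParaView modulefile provides both
# the GUI (paraview) and the parallel server (pvserver) used on this page.
check_paraview_tools() {
    local missing=0 exe
    for exe in paraview pvserver; do
        if ! command -v "$exe" >/dev/null 2>&1; then
            echo "not found on PATH: $exe (check which modulefile is loaded)" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Run it right after module load. A non-zero return with "not found on PATH: paraview" suggests a version without the GUI has been loaded.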

Create the following jobscript (e.g., naming it pvserver.sh):

#!/bin/bash
#$ -S /bin/bash
#$ -V
#$ -cwd
#$ -pe smp.pe 4
# Change number of cores (max 24)
#$ -l short
#
# Optional (and max 12 cores must be used above)
# Ensure the paraview modulefile is loaded
mpirun -n $NSLOTS pvserver --use-offscreen-rendering

Then submit the above jobscript using

qsub jobscript

where jobscript is the name of your file (e.g., pvserver.sh).
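Keeping the job ID makes the later qstat checks easier. The sketch below assumes Grid Engine's qsub -terse option, which prints only the job ID; the parser also copes with the usual 'Your job NNNN ("name") has been submitted' message. The function names parse_job_id and submit_pvserver are hypothetical.

```shell
#!/bin/bash
# Sketch: submit the pvserver jobscript and print its job ID for later use
# with qstat. "qsub -terse" prints only the job ID; the parser also copes
# with the usual 'Your job NNNN ("name") has been submitted' line.

# Print the first run of digits in the given qsub output.
parse_job_id() {
    printf '%s\n' "$1" | sed -n 's/^[^0-9]*\([0-9][0-9]*\).*$/\1/p' | head -n 1
}

# Usage: submit_pvserver [jobscript]   (defaults to pvserver.sh)
submit_pvserver() {
    local script=${1:-pvserver.sh} out
    out=$(qsub -terse "$script") || return 1
    parse_job_id "$out"
}
```

For example, jobid=$(submit_pvserver pvserver.sh) stores the ID so you can look for it in the qstat output.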

pvserver over InfiniBand (larger multi-node jobs)

Set up paraview by loading the following modulefile on the login node:

module load apps/gcc/paraview/3.14.1-ib

(Note that the 4.0.1 version cannot be used – the paraview GUI executable is not installed on the CSF in this version. It only has the off-screen pvserver and pvbatch executables.)

Create the following jobscript (e.g., naming it pvserver-ib.sh):

#!/bin/bash
#$ -S /bin/bash
#$ -V
#$ -cwd
#$ -pe orte-24-ib.pe 48
#
# Change number of cores, MUST be multiple of 24.
# Ensure the paraview modulefile is loaded
mpirun -n $NSLOTS pvserver --use-offscreen-rendering

Then submit the above jobscript using

qsub jobscript

where jobscript is the name of your file (e.g., pvserver-ib.sh).

Find where the Batch Job is Running

You must now wait until the pvserver jobs are running. Use qstat to check the job queue. Once the state reported by qstat has changed from qw to r, you will see something like:

job-ID  prior   name       user         state submit/start at     queue                          slots
------------------------------------------------------------------------------------------------
   5724 0.05534 pvserver.q xxxxxxxx     r     08/28/2012 17:06:57 R410-short.q@int01.prv.csf         4
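Rather than re-running qstat by hand, the wait described above can be scripted. This is a sketch that assumes the default qstat column layout shown in the sample (job-ID in column 1, state in column 5); job_state and wait_for_running are hypothetical names.

```shell
#!/bin/bash
# Sketch: wait until a job's qstat state changes from "qw" to "r".
# Assumes the default qstat layout: job-ID in column 1, state in column 5.

# Read qstat output on stdin and print the state of job $1 (empty if absent).
job_state() {
    awk -v id="$1" '$1 == id { print $5; exit }'
}

# Usage: wait_for_running JOBID [seconds-between-checks]
wait_for_running() {
    local id=$1 delay=${2:-30} state
    while :; do
        state=$(qstat | job_state "$id")
        case $state in
            r)   return 0 ;;
            "")  echo "job $id is not in the queue" >&2; return 1 ;;
            *E*) echo "job $id is in an error state: $state" >&2; return 1 ;;
        esac
        sleep "$delay"
    done
}
```

For example, wait_for_running 5724 checks every 30 seconds and returns once the job reaches state r, or fails if the job leaves the queue or enters an error state.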

Make a note of the queue value, specifically the hostname after the @. In this example it is int01 (you don’t need the .prv.csf part).
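The hostname can also be pulled out of the queue column automatically. A sketch, assuming the default qstat layout where column 8 holds the queue (e.g. R410-short.q@int01.prv.csf). The function name job_host is hypothetical. It prints nothing while the job is still waiting, because qw jobs have no queue entry yet.

```shell
#!/bin/bash
# Sketch: print the short hostname for job $1 from qstat output on stdin.
# Assumes column 8 is the queue, e.g. R410-short.q@int01.prv.csf -> int01.
# Prints nothing for waiting (qw) jobs, which have no queue entry yet.
job_host() {
    awk -v id="$1" '$1 == id && $8 ~ /@/ {
        q = $8
        sub(/^[^@]*@/, "", q)   # drop the queue name up to and including "@"
        sub(/\..*$/, "", q)     # drop the domain suffix such as ".prv.csf"
        print q
        exit
    }'
}
```

For example, host=$(qstat | job_host 5724) would set host to int01 for the job shown above.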

We now run the paraview GUI client and connect it to the rank 0 MPI process running on the backend nodes. To run paraview use:

qrsh -V -cwd -b y -l inter -l short paraview --server-url=cs://int01
#
# Change int01 to match the hostname used by your pvserver batch job.
# You MUST specify -l short for interactive (-l inter) jobs.
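To avoid editing the hostname into the command each time, the connection step can be wrapped in a function that takes the host as an argument. This is a sketch with hypothetical names (server_url, connect_gui). It builds the same cs:// URL as the command above and adds an optional port; when the port is omitted, pvserver's default port (11111) applies.

```shell
#!/bin/bash
# Sketch: start the GUI connected to the host running pvserver rank 0.
# HOST is the short hostname noted from qstat (e.g. int01). If PORT is
# omitted, the client uses pvserver's default port (11111).

# Build a ParaView client-server URL: cs://HOST or cs://HOST:PORT
server_url() {
    if [ -n "${2:-}" ]; then
        printf 'cs://%s:%s\n' "$1" "$2"
    else
        printf 'cs://%s\n' "$1"
    fi
}

# Usage: connect_gui HOST [PORT]
connect_gui() {
    if [ -z "${1:-}" ]; then
        echo "usage: connect_gui HOST [PORT]" >&2
        return 1
    fi
    # -l short is required for interactive (-l inter) jobs.
    qrsh -V -cwd -b y -l inter -l short paraview --server-url="$(server_url "$1" "${2:-}")"
}
```

For the example job, connect_gui int01 runs exactly the qrsh command shown above.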

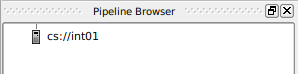

Once the qrsh command has been scheduled to run, the ParaView GUI will appear. The Pipeline Browser area should show an icon representing the backend node running the pvserver rank 0 process, as show below. This indicates you have successfully connected the paraview GUI to the backend pvserver processes. Data processing and rendering will be performed in parallel by the backend processes where possible, and only lightweight command-and-control actions will be performed by the GUI process.

Further information about the backend processes is available via the Help/About/Connection Information menu.