CIR Ecosystem

The Data-Processing Shared Facility

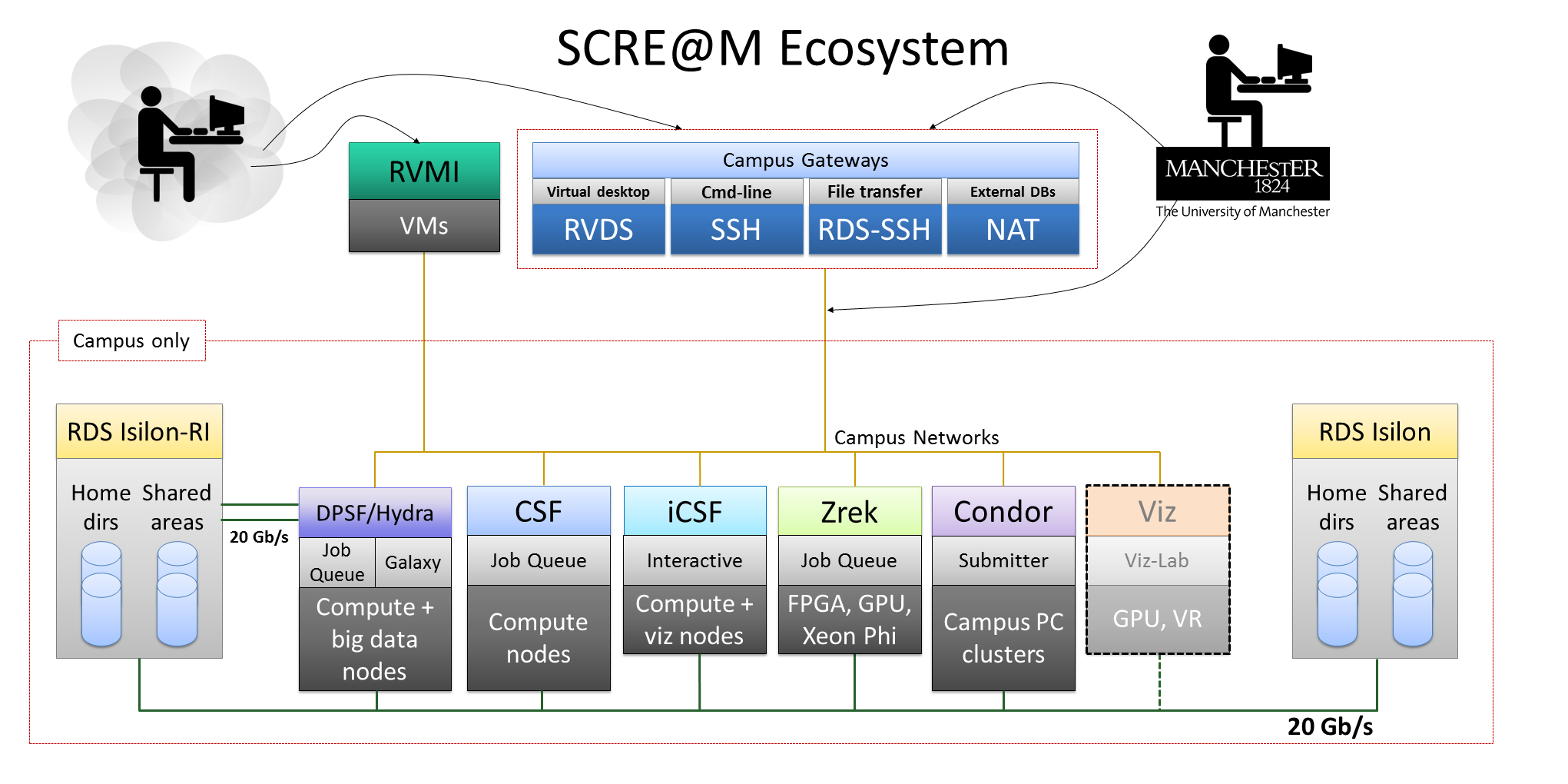

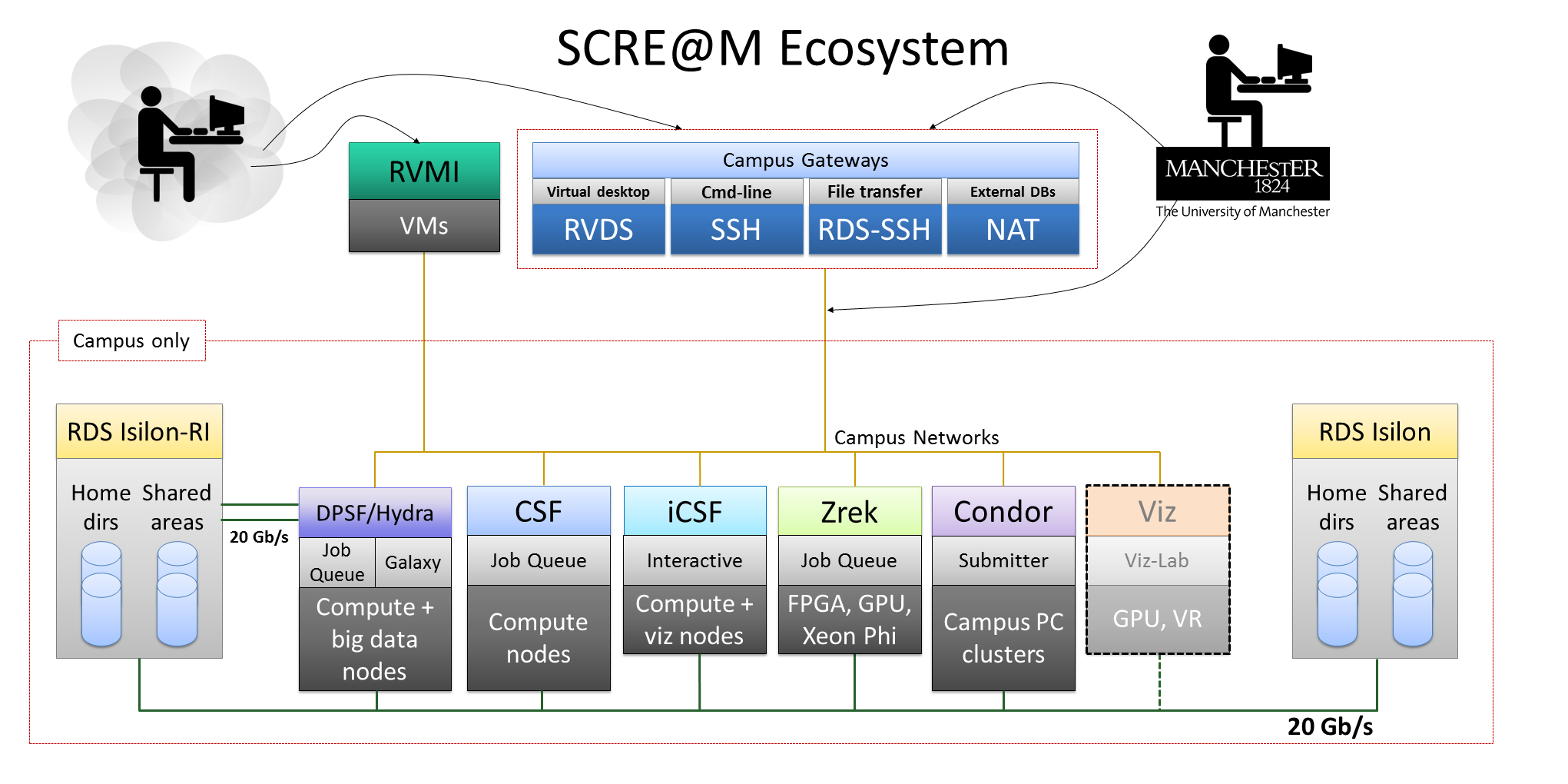

SCRE@M?

Tightly integrated package of platforms/capabilities, including:

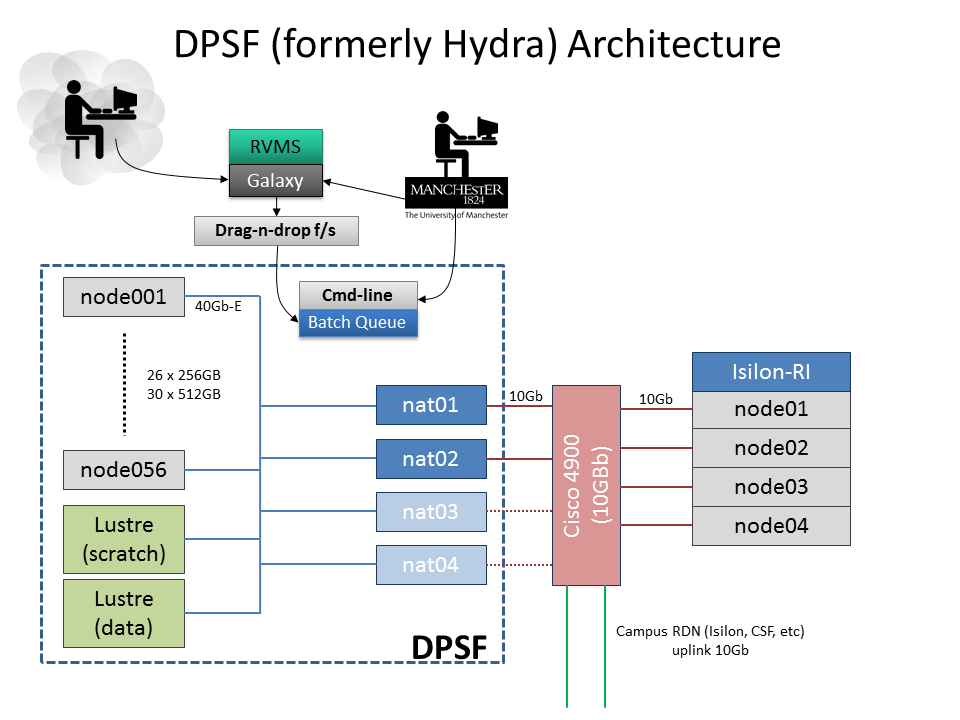

The Data-Processing Shared Facility

The DPSF is a new computational resource within SCRE@M designed for processing large amounts of data:

. . .dominated by EPS, but then. . .

. . .the nature of computational research changed: FLS/MHS joined the party. . .

Dedicated Isilon cluster — "Isilon-RI":

Currently available to contributors only.

For more information about SCRE@M and the DPSF, please visit:

Also: